Chapter 21 Modelling: Non-Linear Regression

We’ve touched upon the basics of modelling in R but it doesn’t have to stop there. This chapter will expand upon the contents of Modelling: Linear Regression to cover non-linear regressions. Since we can’t account for the myriad of models utilized throughout the field, we’ll work through a case-study.

21.1 Experimental Background

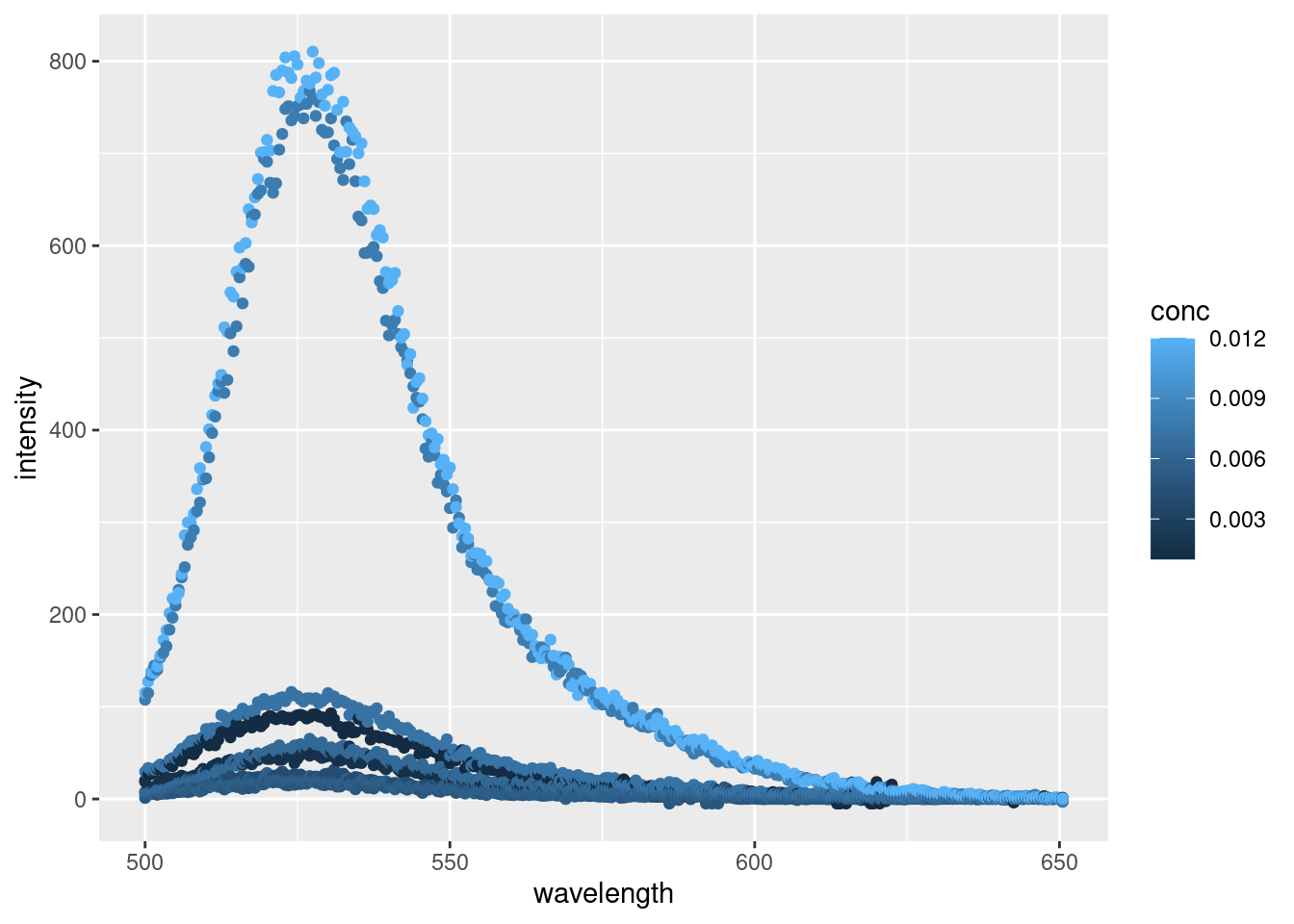

For this chapter we’ll be using data obtained from an experiment in CHM317. In this experiment, students measure the fluorescence of the fluorescent dye acridine orange in the presence of sodium dodecyl sulfate (SDS). In, or near, the critical micelle concentration of SDS, there is a sharp change in absorbance and fluorescence of the solution. Tracking these changes in fluorescence, students can then estimate the CMC of SDS. Experimentally, students prepared solutions of a constant concentration of acridine orange and varying concentrations of SDS. The emission spectrum of each sample was recorded, and we want to take the maximal of each spectra as a data point to build our model.

Let’s go ahead and import our data:

library(tidyverse)

sds <- read_csv("data/CHM317/fluoro_SDSCMC.csv") %>%

pivot_longer(cols = !`Wavelength (nm)`, # select all columns BESIDES `Wavelength (nm)`

names_to = c("conc", "conc.units", "chemical"),

names_pattern = "(.*) (.) (.*)",

values_to = "intensity",

names_transform = list(conc = as.numeric)

) %>%

rename(wavelength = 'Wavelength (nm)') # renaming column, less typing later on.

head(sds)## # A tibble: 6 × 5

## wavelength conc conc.units chemical intensity

## <dbl> <dbl> <chr> <chr> <dbl>

## 1 500 0.001 M SDS 20.0

## 2 500 0.0016 M SDS 18.6

## 3 500 0.004 M SDS 7.02

## 4 500 0.0048 M SDS 1.12

## 5 500 0.0056 M SDS 5.48

## 6 500 0.0064 M SDS 7.72And a quick plot to visualize our data:

Alright, alright, alright. Things are looking like we’d expect with some well behaved data. By plotting each point individually, we can really see the noise inherent with each reading. For a more robust analysis we’d typically conduct several replicates and average out the spectra for each concentration or apply some kind of model to smooth each peak. But today, we’re just interested in getting the maximal fluorescence emission intensity from each reading.

Let’s first annotate our plate to find the highest point, then go about extracting our data for analysis.

21.1.1 Annotating maximal values

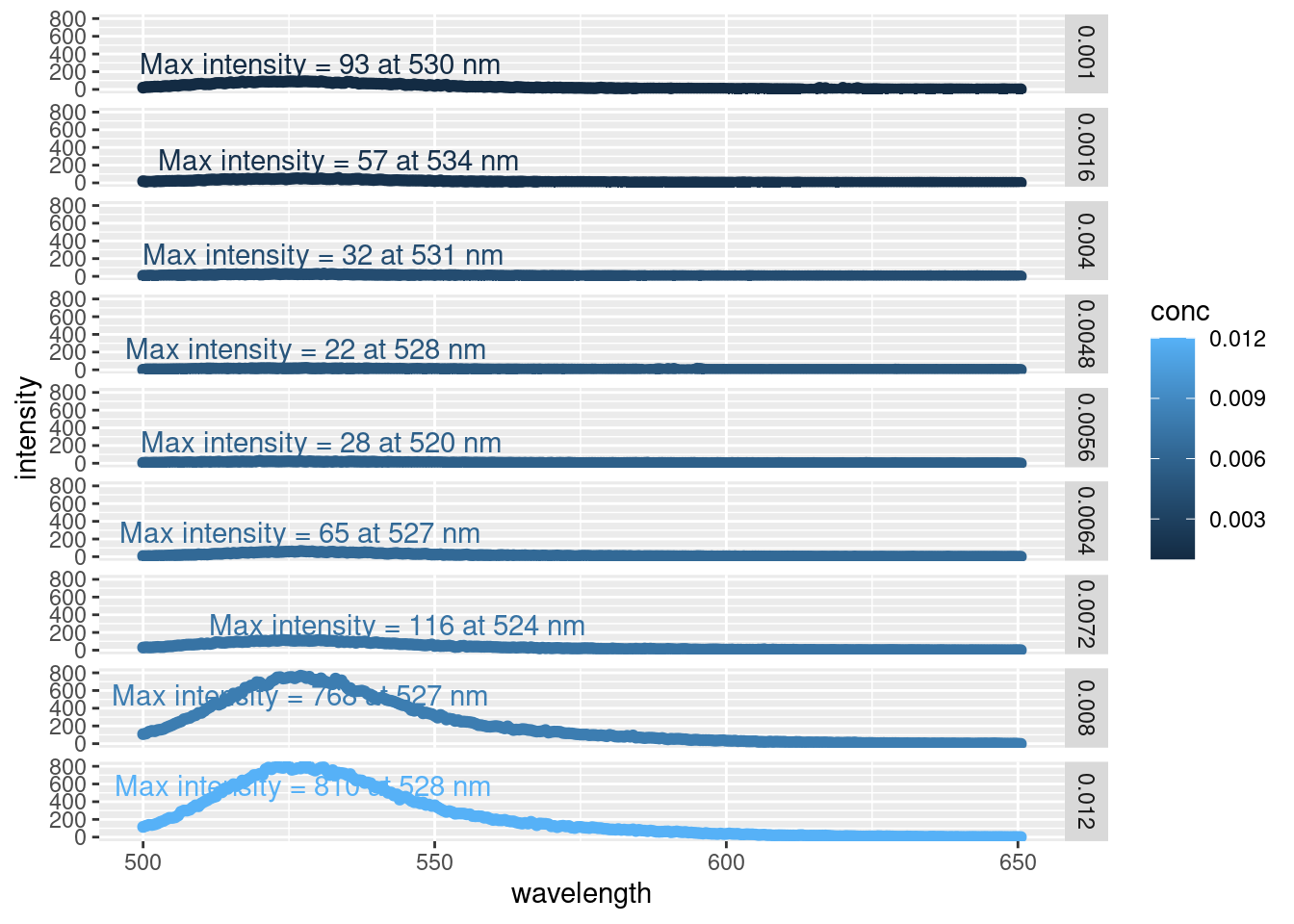

Annotating the maximal point on the plot will take a bit more code than actually obtaining it from the data. For this we’ll need to use the ggpmisc package which contains miscellaneous extensions for ggplot2, and ggrepel so our labels won’t overlap.

library(ggpmisc)

library(ggrepel)

ggplot(data = sds,

aes(x = wavelength,

y = intensity,

colour = conc)) +

geom_point() +

ggpmisc::stat_peaks(span = NULL,

geom = "text_repel", # From ggrepel

mapping = aes(label = paste(..y.label.., ..x.label..)),

x.label.fmt = "at %.0f nm",

y.label.fmt = "Max intensity = %.0f",

segment.colour = "black",

arrow = grid::arrow(length = unit(0.1, "inches")),

nudge_x = 60,

nudge_y = 200) +

facet_grid(rows = vars(conc))

By faceting the plot (i.e. arranging many smaller plots vs. one large one), we can easily see the increase in emission peak intensity as the concentration of SDS increases. Likewise, we can avoid the messy overlap of the max intensity annotations.

This is only one way to plot this data, but this is sufficient because we’re simply inspecting our data at this point. And here we can see that the intensity all occur around a similar wavelength (~ 528 nm)

21.1.2 Extracting maximal values

The plots we made above are great for inspecting our data, but what we really want is the maximal emission intensity value to calculate the CMC of SDS. We can see the maximal values on the plots, but there’s no way we’re typing those in manually. So let’s go ahead and get out maximal values from our dataset:

sdsMax <- sds %>%

group_by(chemical, conc.units, conc) %>%

filter(intensity == max(intensity)) %>%

ungroup()

head(sdsMax)## # A tibble: 6 × 5

## wavelength conc conc.units chemical intensity

## <dbl> <dbl> <chr> <chr> <dbl>

## 1 520 0.0056 M SDS 28.1

## 2 524 0.0072 M SDS 116.

## 3 527 0.0064 M SDS 65.3

## 4 527 0.008 M SDS 768.

## 5 528. 0.012 M SDS 810.

## 6 528 0.0048 M SDS 22.0All we did was tell R to take the row with the highest emission intensity value per group. We specified chemical, conc.units, and conc, in case we had more chemicals in our dataset.

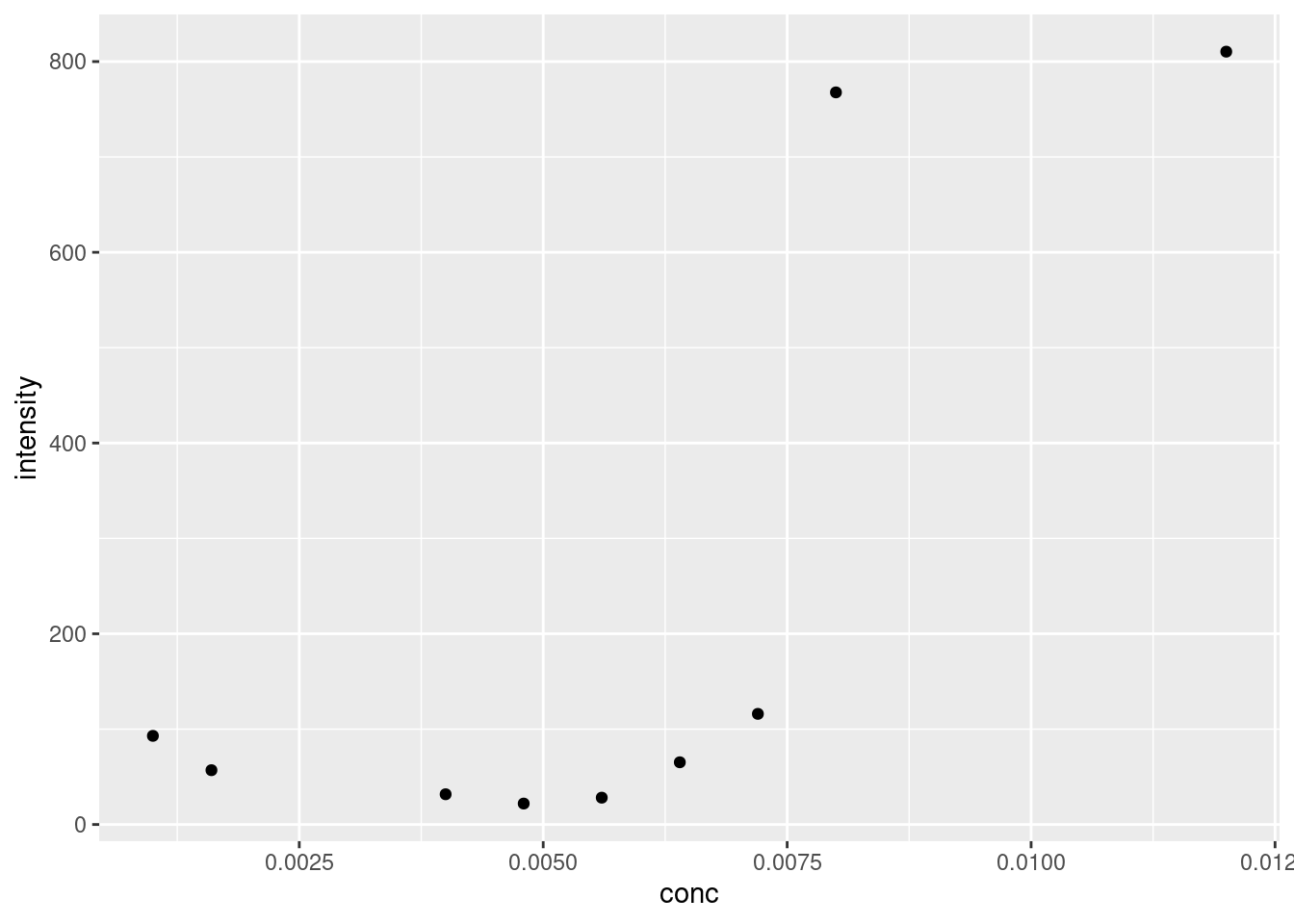

Our maximum values match those we see in our plot above. Let’s see how they stack up against each other:

Figure 21.1: Plot of maximal fluoresence intensity at various concentrations of SDS.

21.2 Modelling Sigmoid Curve

So we want to find the critical micelle concentration of SDS using the maximum fluorescence emission. The CMC is at the ‘midpoint of the sinusoid curve’. Which means we’ll need to a) plot a sinusoid curve and b) extract the midpoint.

The ‘sinusoid’ or ‘S-shaped’ curve mentioned in the lab manual is known as a logistic regression. Logistic regressions are often used to model systems with a largely binary outcome. In other words, the system starts at point A, and remains there for awhile, before ‘quickly’ jumping up (or down) to level B and remain there for the remainder. Examples include saturation and dose response curves.

For our CMC working data, the fluorescence intensity is low when the \([SDS] < CMC\), as micelles are not able to form. However once \([SDS] > CMC\), micelles form and the fluorescence intensity increases. We can see this trend in 21.1.

There are different forms of logistic regression equations. The simplest form is the 1 parameter, or sigmoid, function which looks like \(f(x) = \frac{1}{1+e^{-x}}\). The outputs for this function are between 0 and 1. We could apply this formula to our model if we somehow normalized our fluorescence intensity accordingly. An alternative is to use the four parameter logistic regression, which looks like:

\[f(x) = \frac{a - d}{\left[ 1 + \left( \frac{x}{c} \right)^b \right ]} + d\]

where:

- a = the theoretical response when \(x = 0\)

- b = the slope factor

- c = the mid-range concentration (inflection point)

- This is commonly referred to as the EC50 or LC50 in toxicology/pharmacology.

- d = the theoretical response when \(x = \infty\)

Why do we need such a complicated formula for our model? Well, looking again at 21.1 we see that the lower point is approximately 20, and not zero. Likewise, the upper limit appears to be around 825. The slope factor is necessary because the transition from the low to high steady state occurs over a small, but not immeasurable, concentration range. And lastly, by including the inflection point, we can calculate exactly for this value using R to get the CMC estimate.

21.2.1 Calculating Logistic Regression

A strength of R is its flexibility in running various models, and logistic regression is no different. We can use a number of packages to reach these ends, specifically the drc package contains a plethora of functions for modelling dose response curves (hence drc). However, for this example we’ll use a more generalized approach. Earlier we talked about linear regression, where we adjust the slope and intercept of a linear equation to best fit our data (see Calibration Curves). Recall that this optimization is based on minimizing the distance between the model and all of the experimental points (least squares). Well the stats package has a function called nls that expands upon this to nonlinear models. Per the nls function description: “[nls] determine[s] the nonlinear (weighted) least_squared estimates of the parameters of a nonlinear model.”

So we can create a formula in R based on the four-parameter logistic regression described above. After that, we’ll need to produce some starting details from which the model can build off of. If we don’t tell nls where to start, it can’t function, as the search space is too large. Looking at 21.1, the intensity appears to floor around 20; the intensity appears to max out around 820; the midpoint appears to be around 0.0075 M, and let’s say the slope is 1. Remember, these are starting values from which nls starts to optimize from, and not the actual values used to construct the model.

So, let’s create our model

logisModel <- nls(intensity ~ (a-d)/(1 + (conc /c)^b) + d,

data = sdsMax,

start = list(a = 20, # min intensity

b = 1, # slope

c = 0.0075, # CMC

d= 820) # max intensity

)## Error in numericDeriv(form[[3L]], names(ind), env, central = nDcentral): Missing value or an infinity produced when evaluating the model… and we get an error message. Get used to these when modelling! Don’t worry about understanding it completely, error messages are often written with programmers in mind so they can be a bit cryptic. You can often copy and paste these directly into any search engine to get some more information, but this one is simple enough: we either have a missing value or an infinity produced. Well we have six input parameters in our model: a, b, c, d, our independent variable conc, and our dependent variable intensity. We’ve also supplied starting values to all of them via the list we created inside the function. Therefore, one of our starting values must be too far off from a plausible start point and is causing troubles in the nls function. They all look good except for the slope start value b = 1.

The slope here is an approximation for the slope between the min value a and max value d. Looking at our data in 21.1, that slope may be a bit shallow considering the large jump in intensity. Let’s increase the value of b and try again:

logisModel <- nls(intensity ~ (a-d)/(1 + (conc /c)^b) + d,

data = sdsMax,

start = list(a = 20, # min intensity

b = 10, # new slope

c = 0.0075, # CMC

d= 820) # max intensity

)Eh, no errors! Once you progress beyond simple linear regressions, modelling becomes more of a craft. If we were trying to apply this model to multiple datasets, we would probably want to shop around cran to find a package with self-starting models. This way we can circumvent having to supply starting parameters. Anyways, that’s for another day.

For now, let’s take a look at our model outputs which are all stored in the logisModel variable. To this end, we’ll use the broom package discussed in Modelling: Linear Regression. Specifically, we’ll use tidy to get an output of our estimated model parameters (i.e. a,b,c, and d), and augment for a data frame of containing the input values, and the estimated intensity values.

Let’s look at our fitted values:

## # A tibble: 9 × 4

## intensity conc .fitted .resid

## <dbl> <dbl> <dbl> <dbl>

## 1 28.1 0.0056 49.4 -21.3

## 2 116. 0.0072 116. -0.123

## 3 65.3 0.0064 49.7 15.6

## 4 768. 0.008 768. 0.0977

## 5 810. 0.012 810. -0.0861

## 6 22.0 0.0048 49.4 -27.5

## 7 93.0 0.001 49.4 43.5

## 8 31.7 0.004 49.4 -17.7

## 9 57.0 0.0016 49.4 7.51What we can see here from augment are the intensity and conc values we inputted into R. .fitted are the intensity values for a given concentration fitted to out model, and .resid is the residuals, the difference between the actual and estimated values.

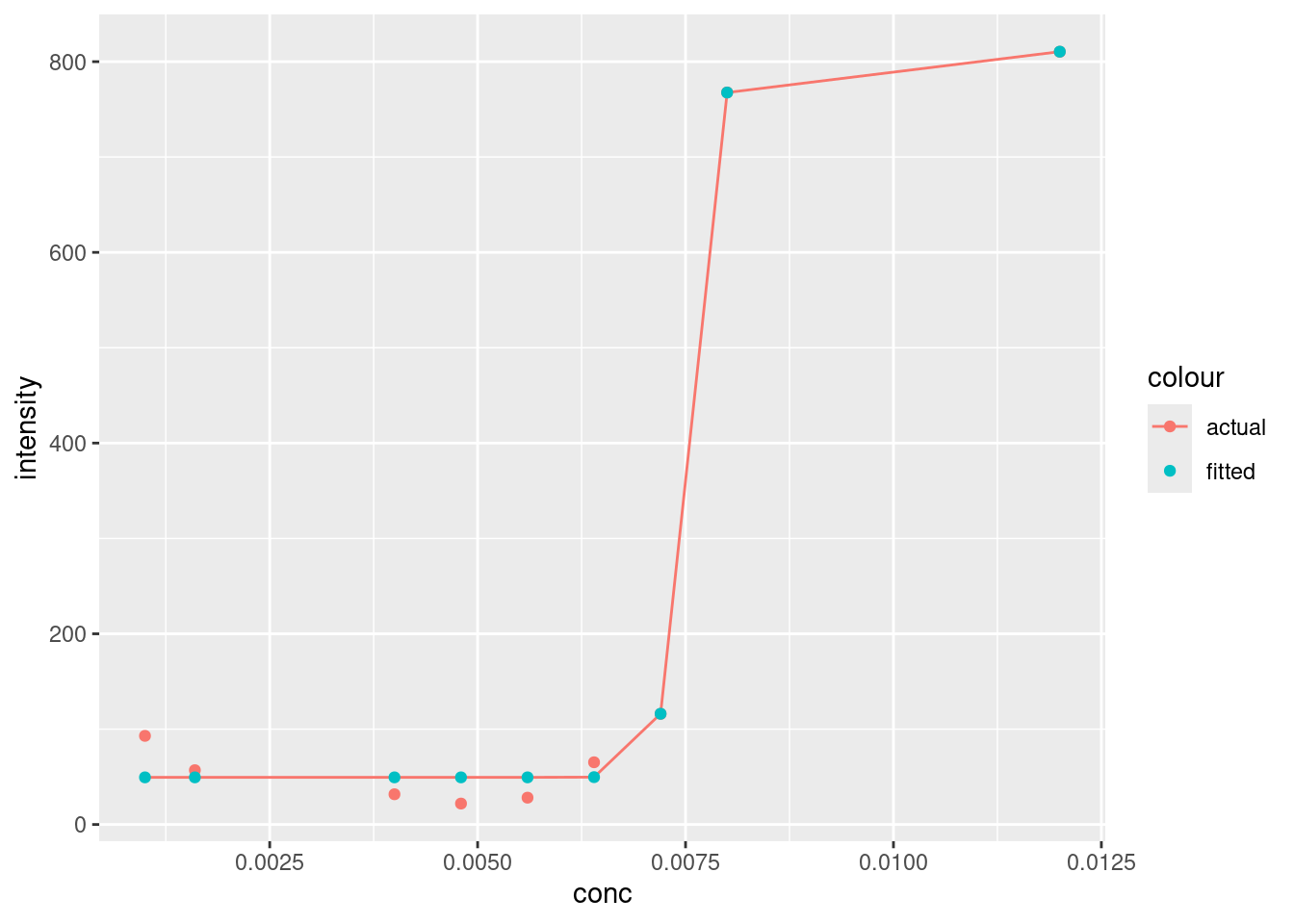

Let’s go ahead and plot our actual and fitted values against each other.

ggplot(augment, aes(x = conc, y = intensity, colour = "actual")) +

geom_point() +

geom_line(aes(y = .fitted)) +

geom_point(aes(y = .fitted, colour = "fitted"))

Looks pretty good, although it’s interesting how the baseline at lower concentrations doesn’t plateau like the model values. You’ll note that the line produced by geom_line will only draw a straight line between points. There are ways to address this, but we don’t need to for our needs right now.

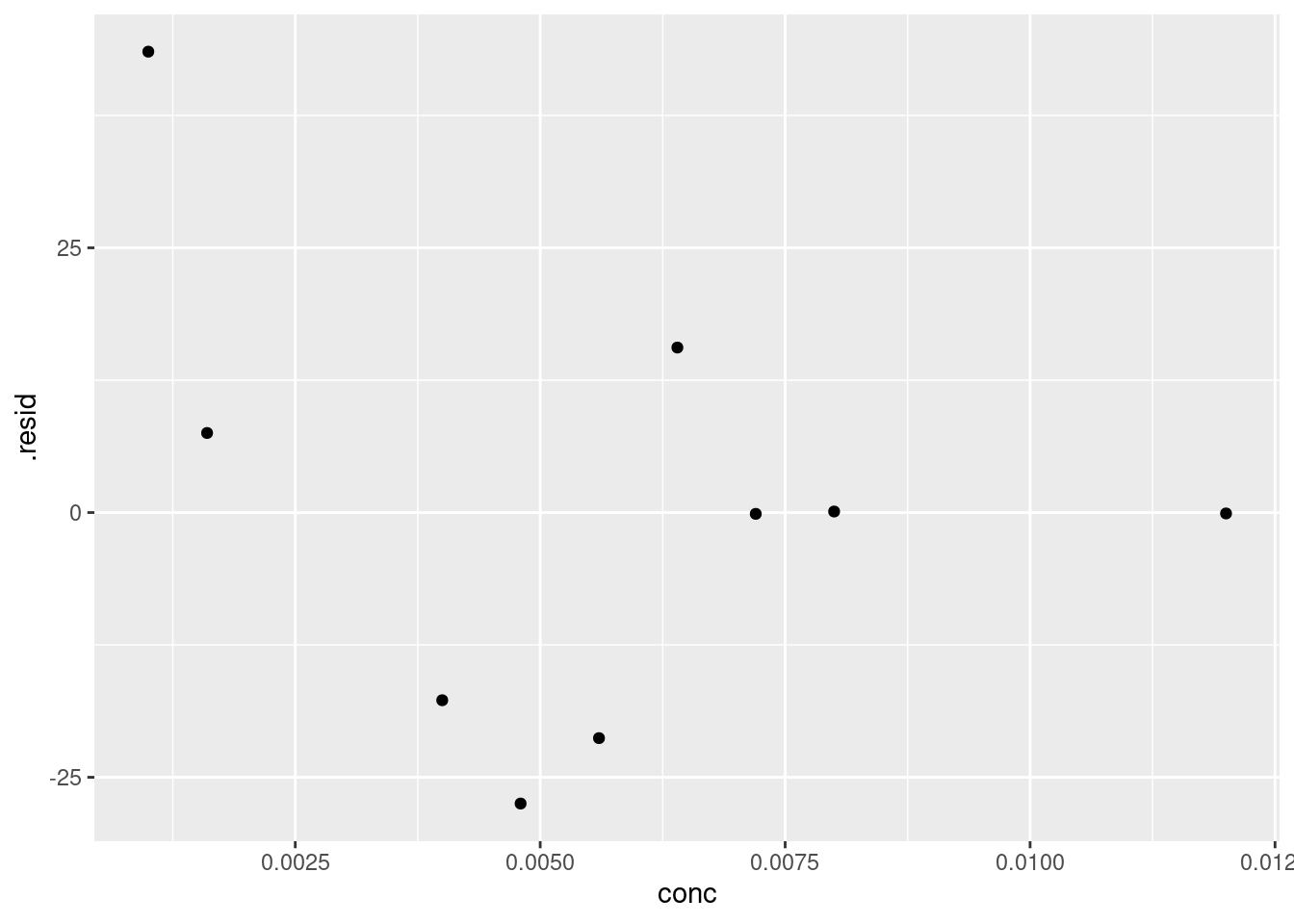

There doesn’t appear to be any gross outliers in our model, so it seems to have done a good job. We can verify this by checking the residuals(see [Plotting residuals]):

We can’t see any obvious patterns in the residuals (i.e. all are negative), so we can have further confidence in the fit of our model.

21.2.2 Extracting model parameters

To extract the model parameters a, b, c, and d we can use the tidy function:

## # A tibble: 4 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 a 49.4 11.1 4.44 0.00678

## 2 b 49.0 9.65 5.07 0.00385

## 3 c 0.00755 0.0000785 96.2 0.00000000230

## 4 d 810. 27.3 29.7 0.000000808Looking past the scientific notation, our model values are pretty similar to what we estimated. Specifically, c, our midpoint value is 0.0076 M. Not too bad from our original estimate. And recall that the midpoint of our curve corresponds to the critical micelle concentration of SDS, which we’ve estimated to be 0.0076M. Not too far from the literature value of 0.0081 M.

21.3 Summary

In this chapter we reviewed non-linear modelling using a case study with four-parameter logistic regression. While the equation covered here might not be the one you need, the steps are identical:

- Tidy and visually inspect your data to see and patterns

- Determine which mathematical model you’ll be using

- Use the

nlsor other suitable package to calculate your model; you may need to tinker around with the starting values, estimate them from your data. - Verify your model outputs (both fitted and residuals).

Lastly, we’ve also touched upon labelling maximal values in a plot using the ggpmisc package. Notably useful for determining local peaks in spectroscopy data.

21.4 Exercise

There is a set of exercises available for this chapter!

Not sure how to access and work on the exercise Rmd files?

Refer to Running Tests for Your Exercises for step-by-step instructions on accessing the exercises and working within the UofT JupyterHub’s RStudio environment.

Alternatively, if you’d like to simply access the individual files, you can download them directly from this repository.

Always remember to save your progress regularly and consult the textbook’s guidelines for submitting your completed exercises.